Intro to MML

Math for Machine Learning

Mon, Jan 13, 2025

What is Machine Learning?

Machine learning is a class of algorithms that learn from data.

Could you be more specific?

Machine learning algorithms are designed to make inferences from data to perform some general mission, rather than designed to perform a specific task.

Maybe some examples would help?

Classification

The general problem of classification is to assign an input into one of several classes. One important example is image classification.

In image classification,

- We don’t write a program from scratch that can classify all kinds of different images into all kinds of different categories.

- Rather, we write a general image classification algorithm that can be tweaked by a large number of parameters.

- When we want to use the program to perform a specific task, we feed it a slew of data (maybe even labeled data) that’s used to fine tune the parameters.

Example data

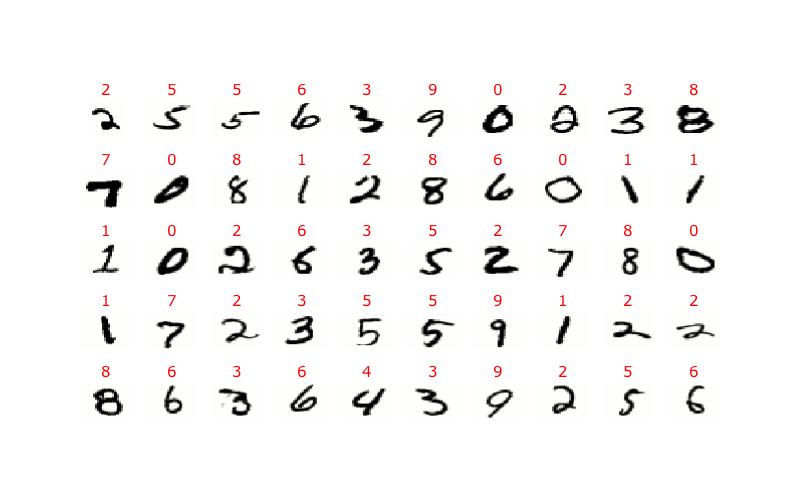

The digit recognizer demo is a good example of a classifier built from labeled data. A small sample of the labeled data looks like so:

Digit data and labels

The labeled digits on the previous slide are taken from the MNIST database, which consists of 60,000 training images and 10,000 test images.

Each digit is represented as a \(28\times28\) matrix of grayscale values generated by scanning an actual handwritten digit.

Associated with each image is a corresponding label indicating the intended value of the handwritten digit.

The idea is to use the training data to teach an algorithm how to read similarly written digits.

The test data is then used to evaluate how well the algorithm works.

Regression

While classification maps input into discrete categories, regression maps input into a continuous numerical range.

The NCAA Tournament demo is an example of regression, since it assigns a number between zero and one to each game.

Of course, you can round to obtain a 0 or a 1, indicating a win or a loss for the first team. Thus, there can be a connection between regression and classification.

Algorithms

Here are a few of the algorithms that we’ll take a look at this semester:

- Regression - Linear and Logistic

- K-nearest neighbor classification

- K-means clustering

- Google pagerank and Eigenrating

- Neural Networks

I’d love to also talk a bit about random forests and support vector machines but not sure if we will.

Types of ML algorithms

These algorithms generally fall into two categories:

- Supervised and

- Unsupervised

There can be overlap and sometimes both are used in the course of a problem, though.

Supervised learning

Supervised algorithms are generally built on labeled data. There may be a single label or several; they can be categorical or numerical.

The labels are used to guide us to the correct results during training; they are then used to evaluate the results during testing.

Linear regression, K-nearest neighbors, and neural networks are all examples of supervised algorithms.

Unsupervised learning

Unsupervised algorithms work without labels. Instead, they use the intrinsic structure of the data to find patterns to group or rate the data.

K-means clustering and eigenrating are examples of unsupervised algorithms.

Mathematics

All these algorithms are rooted in mathematics. The main objective of this course is to give you enough mathematical knowledge to understand the basics behind machine learning algorithms.

Mathematical topics

The key mathematical topics are:

- Single variable calculus,

- Linear algebra,

- The geometry of \(n\)-dimensional space,

- Multivariable calculus

- Probability theory and statistics.

That’s a lot, incorporating at least 4 or 5 separate math classes for a typically major in the sciences.

Thus, we’ll need to focus on conceptual understanding, computation, and basic algebraic manipulation - as opposed to intense algebraic manipulation and proofs.

Wrap up

Hopefully, we’ll tie all that math, code, and algorithmic analysis together reasonably well.