Floating point numbers and roundoff¶

We saw something like this ugliness already:

((0.1+0.2)+0.3) - (0.1+(0.2+0.3))

The book provides a similar example in the estimation of $$\cos(1) = \lim_{h\to0}\frac{\sin(1+h) - \sin(1-h)}{2h}.$$ Well, $\cos(1) \approx 0.54030230586813977$:

from numpy import cos

cos(1)

And I guess we could approximate the limit numerically as follows:

from numpy import sin

[(sin(1+h) - sin(1-h))/(2*h) for h in (10**-5,10**-10, 10**-15, 10**-20)]

Well, it seems disturbing that our estimates get worse with smaller values of h! The text provides a few more examples. These all have two ingredients in common:

- The difference of two numbers that are very close together must be treated with caution and

- The fact that real numbers are only represented to a finite precision must cause round off error.

This second issue is complicated by the fact that the computer's representation of numbers is not in base 10 but in base 2. Thus, numbers like 0.1, which you expect to be exactly representable, are not.

In spite of all this, computer error is not something to be feared but something to be understood.

Floating point numbers in general¶

Floating point numbers are represented in terms of a base $\beta$, a precision $p$, and an exponent $e$. If $\beta=10$ and $p=2$, then the number $0.034$ can be represented as $3.4\times 10^{-2}$. With this representation, its exponent is $e=-2$. With this specification, floating point numbers are not unique, as this same number can be represented as $34\times 10^{-3}$ or $0.34\times 10^{-1}$. Thus, we typically take a floating point number to have the specific form $$ \pm(d_0+d_1\beta^{-1}+\cdots+d_{p-1}\beta^{-(p-1)})\beta^e, $$ where each $d_i$ is an integer in $[0,\beta)$ and $d_0\neq0$. Such a representation is said to be a normalized floating point number.

Computers work more naturally in binary (or base 2) and most are hardwired this way. Thus, in the equation above, we have $\beta=2$ and each digit $d_i$ is zero or one. Taking this into account, here are some easily representable numbers with $p=5$ and $-5\leq e \leq 5$:

- $8 = 1.0000 \times 2^3 = 1000_{\dot 2}0$

- $\frac{1}{4} = 1.0000 \times 2^{-2} = 0_{\dot 2}01$

- $\frac{5}{16} = 1.0100 \times 2^{-2} = 0_{\dot 2}0101$

- $42 = 1.0101 \times 2^{5} = 101010_{\dot 2}$

64 bit IEEE numbers¶

The IEEE standard for double precision floating point numbers specifies that 64 bits be used to represent the number. Specifically, it requires

- $1$ bit for the sign $s$.

- $11$ bits for the exponent $e$ giving a range of $2^{11} = 2048$ choices for the exponent, which is assumed to be between $-1023$ and $1024$.

- $53$ bits for the significant or mantissa $c=1.d_1d_2\cdots d_{52}$.

Since the leading digit must be a $1$, we only need to store 52 digits. Given those choices for $s$, $e$, and $c$, our number is $$(-1)^s\times c \times \beta^e.$$ Since the exponent $e$ is expressed in binary with no sign, it might be more natural to think of it in the range $0\leq e < 2048$. In this case, we might write the number $$(-1)^s\times c \times \beta^{e-1023}.$$ Note that the standard leaves quite a bit freedom allowing differences and optimizations between implementations. Often, a few strings are reserved for special numbers or, more often, there are a few extra bits included.

As an example, suppose we'd like to compute the number with bit string $$1\,\,1010000000000000000000000000000000000000000000000101\,\,10100000101$$ To compute this, we might do the following:

e = 2**10 + 2**8 + 2**2 + 2**0

c = 1 + 2**-1 + 2**-3 + 2**-50 + 2**-52

s = 1

(-1)**s * c * 2.0**(e-1023)

Exploring numbers with Python¶

Python provides some useful tools for exploring floating point numbers. Two such tools are float.hex, which displays the hexadecimal expansion of a number and its inverse float.fromhex. For example, here's my computer's representation of 0.1:

float.hex(0.1)

This indicates that the internal representation of $0.1$ is $$2^{-4}\left(1+\sum_{k=1}^{12} \frac{9}{16^k} + \frac{10}{16^{13}}\right) \approx 0.10000000000000000555.$$

We can use this information to fully understand the discrepancy between

$(0.1+0.2)+0.3$ and $0.1+(0.2+0.3)$.

Not surprisingly, the hexadecimal representation of $0.2$ is

0x1.999999999999ap-3.

The hexadecimal representation of $0.3$ is 0x1.3333333333333p-2,

which to 17 digits is $0.29999999999999999$. The sum of

these three numbers yields $0.6000000000000001$, which is slightly larger than

the desired value of $0.6$. By contrast, the computer's computation of

$0.2+0.3$ yields exactly $0.5$, which is not surprising since $0.5$

easily representable in binary. Then, $0.1+0.5$ yields exactly the computer

representation of $0.6$ after rounding.

Rounding¶

Any set of floating point numbers is not algebraically closed. That is, you can do arithmetic with floating point numbers and potentially generate numbers not in your set. Given a real number x (perhaps generated via a computation with floating point numbers), the computer will generally store it as fl(x), which is the floating point number closest to x. This phenomenon is called rounding.

For example, when we compute $1/10$, we generate the number slighly larger than 0.1 that's above.

Distribution¶

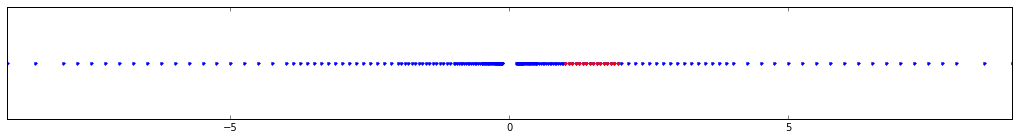

It's worth pointing out that normalized floating point numbers are not uniformly distributed. Let's plot, for example, the binary floating point numbers with precision 4 and maximum exponent size 3:

The numbers of the form $1.dddd$ (called lead_ones in the code) are shown in red. The other postive numbers have the form $2^nx$ for some $x$ with a leading 1. That leads to the scaling effect that we see.

Note, also, the hole near zero. That's because the smallest, postive, representable number (in this system) is $1.0000 \times 2^{-3} = 2^{-3}$. The next smallest number is $1.0001\times2^{-3} = (1+1/16)\times2^{-3}$ and the difference between these is quite a bit smaller than the smallest postive.

The difference between the smallest representable number that's larger than one and one is typically called machine epsilon.